When you open a report in Google Analytics, it takes time and resources to calculate the results and present them in your reports. In some cases, Google Analytics will take a portion of your data and use this to estimate the total.

Instead of creating the custom report based on all of the sessions, Google Analytics might use half of those sessions and then provide an estimated total for the report. Now Google Analytics only needs to calculate figures based on half of the data, and the report is quicker to load.
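The idea of estimating a total from half of the sessions can be illustrated with a minimal sketch. The session data, the 5% conversion rate and the `estimate_total` helper are all hypothetical, and Google Analytics does not necessarily sample in exactly this way.

```python
import random


def estimate_total(session_conversions, sample_rate, seed=0):
    """Estimate total conversions from a random sample of sessions."""
    rng = random.Random(seed)
    sample_size = max(1, round(len(session_conversions) * sample_rate))
    sample = rng.sample(session_conversions, sample_size)
    # Scale the sampled sum back up to the full number of sessions.
    scale = len(session_conversions) / sample_size
    return sum(sample) * scale


# Hypothetical data: 10,000 sessions, roughly 5% of which convert.
data_rng = random.Random(42)
sessions = [1 if data_rng.random() < 0.05 else 0 for _ in range(10_000)]

actual = sum(sessions)
estimated = estimate_total(sessions, sample_rate=0.5)
print(f"actual={actual}, estimated from half of the sessions={estimated:.0f}")
```

The estimate is close to the actual total but rarely identical, which is the trade-off sampling makes in exchange for speed.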

Since Google Analytics processes data for your standard reports in advance, including the Audience, Acquisition, Behavior and Conversion reports, these reports will include unsampled data.

Google Analytics will only sample data if you modify a standard report and the date range includes more than a set number of sessions.

For example, this happens if you apply a segment to a standard report or if you add a secondary dimension.

You will also find that data is sampled when you create a custom report, depending on the metrics and dimensions you choose. Say you create a custom report that includes a few different metrics and apply a segment. The report says that there were 51, goal conversions for the date range.

The data is sampled and is based on only a portion of your sessions. Your raw data from your website says that you had 47, goal conversions for the same period.

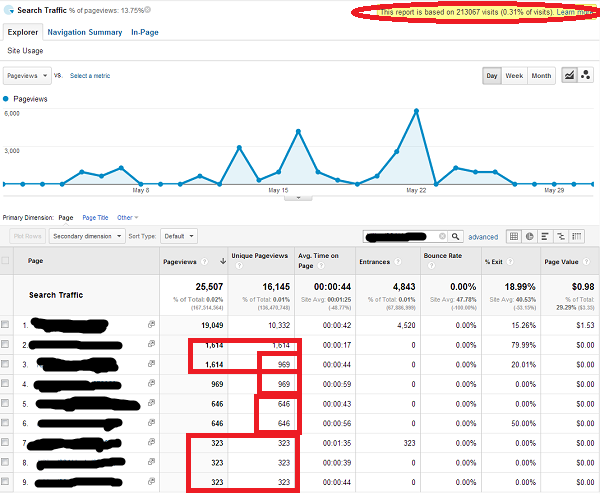

This means that the sampled data is reporting 8. It comes down to how accurate you need your report to be for your analysis. The shield icon at the top of your reports tells you if the report uses sampled data or not. There are different approaches you can take to avoid data sampling in Google Analytics.
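The gap between a sampled figure and the raw figure can be expressed as a percentage difference. The sketch below uses hypothetical conversion counts rather than the figures from the report above, and `percent_difference` is an illustrative helper, not part of any Google Analytics tooling.

```python
def percent_difference(sampled_value, unsampled_value):
    """Return how far the sampled figure is from the unsampled one, in percent."""
    if unsampled_value == 0:
        raise ValueError("unsampled_value must be non-zero")
    return (sampled_value - unsampled_value) / unsampled_value * 100


# Hypothetical figures, chosen only for illustration.
sampled = 51_000
unsampled = 47_000
print(f"Sampled report differs by {percent_difference(sampled, unsampled):.1f}%")
```

Whether a difference of this size matters depends on how accurate the report needs to be for your analysis.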

The quickest way to avoid data sampling is to reduce the date range you are using for your report. When you reduce the number of days in your report, you will also be reducing the number of sessions. For example, if you have three months selected for your date range and you see sampled data, then try reducing the date range to one or two months.

Even if data is still sampled, Google Analytics will be using a larger portion of sessions for the report. You can also simplify the report itself. For example, if you remove the segment you have applied or the secondary dimension, then this should improve data accuracy.
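Why a shorter date range leads to a larger portion of sessions being used can be sketched with a simple model. It assumes Google Analytics reads at most a fixed number of sessions per report; the limit and the traffic figures are hypothetical, and the real behavior may differ.

```python
def sampled_share(sessions_in_range, sample_limit):
    """Approximate share of sessions used when at most sample_limit are read."""
    if sessions_in_range <= 0:
        raise ValueError("sessions_in_range must be positive")
    return min(1.0, sample_limit / sessions_in_range)


# Hypothetical limit and traffic figures, for illustration only.
LIMIT = 100_000
for months, sessions in [(3, 600_000), (2, 400_000), (1, 200_000)]:
    share = sampled_share(sessions, LIMIT)
    print(f"{months} month(s): about {share:.0%} of sessions used")
```

Under this model, halving the number of sessions in the date range doubles the share of sessions behind the report.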

Paid third-party tools like Supermetrics (affiliate link) let you export your data from Google Analytics and avoid data sampling. Supermetrics includes a handy option that will automatically try to reduce data sampling.

You can also combine data from multiple date ranges into a single report since smaller date ranges will mean less sampling. You will need to take time to recreate your report, but then you will have more control over the data.
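Combining smaller date ranges can be sketched as follows. `split_date_range` and `combined_total` are hypothetical helpers, and `fake_fetch` stands in for whatever export you actually use (it simply pretends every day has 100 sessions).

```python
from datetime import date, timedelta


def split_date_range(start, end, days_per_chunk):
    """Split an inclusive date range into consecutive, non-overlapping chunks."""
    if days_per_chunk < 1:
        raise ValueError("days_per_chunk must be at least 1")
    chunks = []
    chunk_start = start
    while chunk_start <= end:
        chunk_end = min(chunk_start + timedelta(days=days_per_chunk - 1), end)
        chunks.append((chunk_start, chunk_end))
        chunk_start = chunk_end + timedelta(days=1)
    return chunks


def combined_total(start, end, days_per_chunk, fetch_total):
    """Sum a metric over small date ranges; fetch_total is a placeholder callable."""
    return sum(fetch_total(s, e) for s, e in split_date_range(start, end, days_per_chunk))


def fake_fetch(chunk_start, chunk_end):
    """Stand-in for a real export: every day has exactly 100 sessions."""
    return 100 * ((chunk_end - chunk_start).days + 1)


print(split_date_range(date(2020, 1, 1), date(2020, 1, 10), 4))
print(combined_total(date(2020, 1, 1), date(2020, 3, 31), 7, fake_fetch))
```

This only works for metrics that can be added together, such as sessions or goal conversions. Metrics like users or conversion rates cannot simply be summed across date ranges.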

In this post, we have looked at data sampling in a standard website-only property. This is a new type of property that has different features and limits compared to a standard property. You can send data from Google Analytics to Google BigQuery. This lets you access raw, unsampled data. Google BigQuery is a separate product and you will need to be comfortable with SQL to query the data.

Data sampling is designed to speed up reporting in Google Analytics and depending on the report, sampling may or may not be an issue. And if you do modify your reports, then remember, the quickest and easiest way to reduce data sampling is to select a shorter date range. February 4, Digital Analytics.

Is data sampling good or bad? It depends. How can you tell if data is sampled? Hovering over the shield gives you more details. Can you adjust data sampling? Yes and no.




| [UA] About data sampling | If you are looking at a single day, Google Analytics will reference a Daily Processed Table of your data created for that day. To speed up reports with longer date ranges, Google Analytics also creates Multi-Day Processed Tables, which contain 4 days' worth of data. These are created from the Daily Processed Tables, and their row limits are higher, with one limit for the free product and a larger one for the paid version of Google Analytics. The higher the cardinality, the more information could be lost. For instance, a website might only have a limited number of pages, but it appends a parameter with a unique user or session code to each page URL, so the number of unique page values grows far beyond that. If the row limit were reached by 7am, every pageview after that point for the entire day would be rolled into (other) as well. What gets placed into (other) is not chosen by an algorithm like the general sampling method; it is a first in, first out method. The sampling thresholds (i.e. limits) for Google Analytics differ depending on whether you are using the free product or have purchased the paid version. If you are using the free product, sampling will kick in within the Google Analytics interface once the date range you are querying passes a set number of sessions. This sampling occurs at the Property level, so View level filters do not impact the sample size. If you are using the paid version, sampling will kick in at a threshold measured in millions of sessions, and the sampling occurs at the View level. Because of this, View level filters DO impact the sample size. Beyond the difference in thresholds, a key benefit of the paid version is that sampling occurs at the View level. Data Studio uses the same sampling behavior as Google Analytics. 
This means that if a chart in Data Studio creates an ad hoc request for data in Google Analytics, standard sampling rules will come into play. You can't change the Google Analytics sampling rate in Data Studio, but you can opt to "Show Sampling" with the sampling indicator. The Core Reporting API is sampled in specific cases, for users of both the free product and the paid version. Google Analytics calculates certain combinations of dimensions and metrics on the fly. To return the data in a reasonable time, Google Analytics may only process a sample of the data. You can use the Sampling Level (samplingLevel) parameter to specify the sampling level to use for a request in the API. Exceptions to the above are the Multi-Channel Funnel (MCF) and Attribution reports. These always sample at the View level for everyone, so View level filters do impact their sample size. Like Default Reports, MCF reports always start out unsampled, unless you modify them in some way, for example by changing the lookback window or the included conversions, or by adding a segment or secondary dimension. If you do modify the report, it will return a maximum sample of 1 million sessions regardless of whether you are on the free or the paid version. The Flow Visualization Report always samples at a fixed maximum number of sessions for the date range you are considering. This causes the Flow Visualization Report to be consistently more sampled, and more inaccurate, than any other section of your Google Analytics data. In particular, Entrance, Exit, and Conversion Rates will likely differ from those in other Default Reports, which originate from different sample sets. There is also a limit on the number of unique conversion paths in any report; additional conversion paths roll up into (other). Custom Tables are a powerful way for a Google Analytics user to overcome both high cardinality and standard sampling in Google Analytics. 
Once a Custom Table is created, any report that matches a subset of that Custom Table can access it for fast, unsampled data, just as a Default Report uses the Processed Tables. This includes requests made through the API. One major difference between a Custom Table and a Processed Table is that the limit on the number of unique rows stored per day is increased from 75k to 1 million. Anything over 1 million rows within a Custom Table is then aggregated into (other). For example, suppose you regularly look at a report with City as the primary dimension and Browser as the secondary dimension, showing sessions, pageviews, and so on, and the secondary dimension triggers sampling. You could make a Custom Table with those dimensions and metrics, and you would then see the report unsampled in the Default Reports even with the secondary dimension applied. If you like using lots of parameters in your Page URLs and want to keep them in your reports, you can create a Custom Table with that dimension and its associated metrics, and then see up to 1 million unique rows in the Default Report rather than 75k. Not all metrics or dimensions can be included in Custom Tables, and not all reports can benefit from them. User-based segments cannot be included in Custom Tables. Once a Custom Table is created, it can take up to 2 days to see the unsampled data. Google Analytics will also populate 30 days of historical data from before the creation of the Custom Table; while that is usually in place within 2 days as well, it could take up to 40 days to populate. 
(Joselyn Khor, August 16) The bigger your website grows, the more inaccurate your reports will become. You can determine such data discrepancies by comparing a sampled report with its unsampled version and then calculating the percentage difference between various metrics. Make sure that the difference is statistically significant before you draw any conclusion. You need high accuracy in traffic data: any marketing decision based on inaccurate data would not produce optimum results and may also result in monetary loss. If you are viewing an unsampled GA report, you will see a green shield icon with a checkmark at the top of the report. However, if you are viewing a sampled GA report, you will see a yellow shield icon with a checkmark at the top of the report. The lower the sample size, the greater the data sampling issue. When you view a sampled report, you get the option to adjust the data sampling rate. Drag the button to the left for faster processing and loading of a GA report, when you want GA to load the report quickly even if a smaller data sample is used for analysis. Drag it to the right for higher precision but slower loading, when you want GA to use a larger data sample even if the report loads slowly. Note: You can receive sampled data even when you are using the Google Analytics API. Each GA view has a set of unsampled, pre-aggregated data that is used to quickly display unsampled reports. If a query can be wholly satisfied by the existing unsampled, pre-aggregated data, then GA does not sample the data; otherwise it does. A user query can be standard or ad hoc. 
A standard query can be something like requesting a report for a particular time period or running a report for a particular dimension. GA becomes more likely to sample the data when a user query is based on more sessions than the GA standard threshold, or on more than 25 million sessions in the case of GA premium. GA reports data in the form of tables. Each data table is made up of rows and columns: each row represents a dimension value and each column represents a metric. Each dimension can have a number of values assigned to it. Cardinality is the total number of unique values available for a dimension. Dimensions with a large number of unique values are known as high-cardinality dimensions. If a report includes a high-cardinality dimension, Google Analytics will notify you with a message in the report. The visits table is used to store raw data about each session. Processed tables allow commonly requested reports to be loaded quickly and without sampling. These tables are processed daily and are also known as daily processed tables. A single-day processed table can store a limited number of rows of unique data (dimension value combinations), with a lower limit in GA standard and a limit of 75k rows in GA premium. If a GA premium user is using custom tables, then a single-day processed table can store more rows of unique data. These are the data limits for daily processed tables. However, bear in mind that these are all reporting limits for single-day processed tables and are not processing limits. Google Analytics is still tracking all of the lower-volume dimension value combinations that are rolled up into the (other) row and are thus not displayed in reports. Multi-day processed tables are processed for multiple days and are made from multiple single-day processed tables. 
A multi-day processed table can store a limited number of rows of unique data (dimension value combinations), with separate limits for GA standard and GA premium. These are the data limits for multi-day processed tables. However, bear in mind that these are all reporting limits for multi-day processed tables and are not processing limits. In addition to the data limits for single-day and multi-day processed tables, there are also report query limits: for any date range, GA returns a maximum of 1 million rows of data for a report. There is also a conversion paths limit: on any given day, GA returns only a limited number of unique conversion paths in a report. Google Analytics can start sampling the data when calculating the result for your report if the query cannot be wholly satisfied by the existing unsampled, pre-aggregated data. Where sampling occurs at the view level, view filters can impact the sample size; where it occurs at the property level, view filters do not impact the sample size. Note: It is a common misconception that low-traffic websites do not face data sampling issues. How can you fix data sampling issues? The larger the data size being sampled, the more accurate the traffic estimates are. |
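The `samplingLevel` parameter mentioned above for the Core Reporting API can be sketched as a query string. The sketch makes no network call. The view ID is hypothetical, and the parameter values (`DEFAULT`, `FASTER`, `HIGHER_PRECISION`) and response fields (`containsSampledData`, `sampleSize`, `sampleSpace`) are written as I recall them from version 3 of the API, so treat them as assumptions to check against the official reference.

```python
from urllib.parse import urlencode

# Assumed set of accepted values for the v3 samplingLevel parameter.
VALID_SAMPLING_LEVELS = {"DEFAULT", "FASTER", "HIGHER_PRECISION"}


def build_query(view_id, start_date, end_date, metrics, dimensions,
                sampling_level="HIGHER_PRECISION"):
    """Build a query string that asks for a specific sampling level."""
    if sampling_level not in VALID_SAMPLING_LEVELS:
        raise ValueError(f"unknown sampling level: {sampling_level}")
    params = {
        "ids": f"ga:{view_id}",
        "start-date": start_date,
        "end-date": end_date,
        "metrics": ",".join(metrics),
        "dimensions": ",".join(dimensions),
        "samplingLevel": sampling_level,
    }
    return urlencode(params)


def sampled_fraction(response):
    """Return the share of sessions used, or None if the response is unsampled."""
    if not response.get("containsSampledData"):
        return None
    return int(response["sampleSize"]) / int(response["sampleSpace"])


# Hypothetical view ID and response, for illustration only.
query = build_query("12345", "2020-01-01", "2020-03-31", ["ga:sessions"], ["ga:city"])
example_response = {"containsSampledData": True, "sampleSize": "250000", "sampleSpace": "1000000"}
print(query)
print(sampled_fraction(example_response))
```

Checking the sampling fields on every response is a cheap way to notice when an export has silently fallen back to sampled data.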


To create your reports, Google Analytics first collects raw data in visit tables. Then, it aggregates the data and stores it in default or standard reports. This process lets Google Analytics quickly retrieve your data without sampling.

There are five types of default reports. Sampling becomes a possibility once you customize them. For example, you may want to add a secondary dimension, a new filter, a new segment, or even create a custom report. Whenever customization happens, Google Analytics will first check the default report to see if the data you request is available.

If the relevant data is unavailable, Google Analytics will check the sessions in the visit tables. If there are too many sessions, Google Analytics will sample the data to deliver your report. As mentioned before, Google Analytics samples your reports based on the number of sessions.

Each version of Google Analytics has a different session limit. For Universal Analytics, sampling kicks in when your ad hoc reports reach a set number of sessions at the property level for any chosen date range.

Google Analytics has a query limit of one million rows for a report, regardless of the date range. Cardinality is the number of unique values one dimension can contain. High-cardinality dimensions, meaning dimensions with a large number of unique values, are likely to exceed this limit.
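Cardinality and the effect of a row limit can be illustrated with a small sketch. The page paths are made up, and `roll_up` keeps the most frequent values as a simplifying assumption; it is not a description of how Google Analytics actually decides which rows to keep.

```python
from collections import Counter


def cardinality(values):
    """Number of unique values a dimension takes in the data."""
    return len(set(values))


def roll_up(values, row_limit):
    """Keep the row_limit most frequent values and fold the rest into '(other)'."""
    counts = Counter(values)
    kept = dict(counts.most_common(row_limit))
    other = sum(counts.values()) - sum(kept.values())
    if other:
        kept["(other)"] = other
    return kept


# Hypothetical pageviews for a Page dimension.
pages = ["/home"] * 5 + ["/pricing"] * 3 + ["/blog"] * 2 + ["/a", "/b"]
print(cardinality(pages))
print(roll_up(pages, row_limit=3))
```

With a row limit of 3, the two least visited pages disappear from the table and show up only as a combined (other) row.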

Multi-channel funnel reports behave similarly to ad hoc reports: they will be sampled when you make any changes to the report, for example by adding a new segment, adding a new metric, or changing the lookback window. Note that when any customization happens, Google Analytics will return only a capped sample of conversions.

As in Universal Analytics, sampling can also occur in Google Analytics 4. The default reports will remain unsampled. However, sampling may occur when you create an advanced analysis, such as cohort analysis, exploration, segment overlap, funnel analysis, etc.

Whenever your data exceeds the row limit and the report you create is not a duplicate of a default report, sampling will kick in. So unless you really need custom reports, you should use the default reports as much as you can. Another quick and easy way to avoid sampling is to shorten your date range.

For example, instead of looking at a 6-month period (or whichever period pushes your report over the sessions threshold), you can look at a 2-month period. With the paid Google Analytics version, your report is free from sampling as long as it stays under a much higher threshold, counted in millions of sessions.

The Google Analytics API lets you manually pull data into Google Sheets. You can try to export your data in shorter time frames and then assemble and aggregate it in your spreadsheet. This manual work takes time and, to make it worse, you may copy the wrong data into the wrong cells here and there. If your data is growing rapidly and a spreadsheet can no longer store and process it, you should think about getting a data warehouse.

With a data warehouse, you can easily store granular data from different sources. You can also load your Google Analytics data into a data warehouse to avoid sampling. Data partitioning is a great way to bypass sampling. It is the process of dividing data into smaller and more manageable portions.

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset or a statistical sample (termed sample for short) of individuals from within a statistical population to estimate characteristics of the whole population.

Statisticians attempt to collect samples that are representative of the population. Sampling has lower costs and faster data collection compared to recording data from the entire population, and thus, it can provide insights in cases where it is infeasible to measure an entire population.

Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly in stratified sampling.

In business and medical research, sampling is widely used for gathering information about a population. Random sampling by using lots is an old idea, mentioned several times in the Bible. Pierre Simon Laplace estimated the population of France by using a sample, along with a ratio estimator.

He also computed probabilistic estimates of the error. His estimates used Bayes' theorem with a uniform prior probability and assumed that his sample was random. Alexander Ivanovich Chuprov introduced sample surveys to Imperial Russia. In the US, the Literary Digest prediction of a Republican win in the presidential election went badly awry, due to severe bias [1].

More than two million people responded to the study with their names obtained through magazine subscription lists and telephone directories.

It was not appreciated that these lists were heavily biased towards Republicans and the resulting sample, though very large, was deeply flawed. Elections in Singapore have adopted the practice known as sample counts. According to the Elections Department (ELD), the country's election commission, sample counts help reduce speculation and misinformation, while helping election officials to check against the election result for that electoral division.

Successful statistical practice is based on focused problem definition. In sampling, this includes defining the "population" from which our sample is drawn. A population can be defined as including all people or items with the characteristics one wishes to understand.

Because there is very rarely enough time or money to gather information from everyone or everything in a population, the goal becomes finding a representative sample or subset of that population. Sometimes what defines a population is obvious.

For example, a manufacturer needs to decide whether a batch of material from production is of high enough quality to be released to the customer or should be scrapped or reworked due to poor quality.

In this case, the batch is the population. Although the population of interest often consists of physical objects, sometimes it is necessary to sample over time, space, or some combination of these dimensions. For instance, an investigation of supermarket staffing could examine checkout line length at various times, or a study on endangered penguins might aim to understand their usage of various hunting grounds over time.

For the time dimension, the focus may be on periods or discrete occasions. In other cases, the examined 'population' may be even less tangible. For example, Joseph Jagger studied the behaviour of roulette wheels at a casino in Monte Carlo, and used this to identify a biased wheel.

In this case, the 'population' Jagger wanted to investigate was the overall behaviour of the wheel (i.e. the probability distribution of its results over infinitely many trials), while his 'sample' was formed from observed results from that wheel.

Similar considerations arise when taking repeated measurements of properties of materials such as the electrical conductivity of copper. This situation often arises when seeking knowledge about the cause system of which the observed population is an outcome.

In such cases, sampling theory may treat the observed population as a sample from a larger 'superpopulation'. For example, a researcher might study the success rate of a new 'quit smoking' program on a test group of patients, in order to predict the effects of the program if it were made available nationwide.

Here the superpopulation is "everybody in the country, given access to this treatment" — a group that does not yet exist since the program is not yet available to all. The population from which the sample is drawn may not be the same as the population from which information is desired.

Often there is a large but not complete overlap between these two groups due to frame issues etc. (see below). Sometimes they may be entirely separate: for instance, one might study rats in order to get a better understanding of human health, or one might study records from people born in one year in order to make predictions about people born in another. Time spent in making the sampled population and population of concern precise is often well spent because it raises many issues, ambiguities, and questions that would otherwise have been overlooked at this stage.

In the most straightforward case, such as the sampling of a batch of material from production (acceptance sampling by lots), it would be most desirable to identify and measure every single item in the population and to include any one of them in our sample.

However, in the more general case this is not usually possible or practical. There is no way to identify all rats in the set of all rats. Where voting is not compulsory, there is no way to identify which people will vote at a forthcoming election in advance of the election. These imprecise populations are not amenable to sampling in any of the ways below and to which we could apply statistical theory.

As a remedy, we seek a sampling frame which has the property that we can identify every single element and include any in our sample. For example, in an opinion poll, possible sampling frames include an electoral register and a telephone directory.

A probability sample is a sample in which every unit in the population has a chance greater than zero of being selected in the sample, and this probability can be accurately determined.

The combination of these traits makes it possible to produce unbiased estimates of population totals, by weighting sampled units according to their probability of selection. Example: We want to estimate the total income of adults living in a given street.

We visit each household in that street, identify all adults living there, and randomly select one adult from each household. For example, we can allocate each person a random number, generated from a uniform distribution between 0 and 1, and select the person with the highest number in each household.

We then interview the selected person and find their income. People living on their own are certain to be selected, so we simply add their income to our estimate of the total. But a person living in a household of two adults has only a one-in-two chance of selection. To reflect this, when we come to such a household, we would count the selected person's income twice towards the total.

The person who is selected from that household can be loosely viewed as also representing the person who isn't selected. In the above example, not everybody has the same probability of selection; what makes it a probability sample is the fact that each person's probability is known.
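The weighting in the street example can be checked with a short simulation. The household incomes are hypothetical, and `estimate_street_income` is an illustrative helper that weights each selected person by the inverse of their selection probability.

```python
import random


def estimate_street_income(households, rng):
    """Select one adult per household and weight by the inverse selection probability."""
    total = 0.0
    for incomes in households:
        selected_income = rng.choice(incomes)
        # Each adult in a household of k adults is selected with probability 1/k,
        # so the selected person's income counts k times towards the total.
        total += selected_income * len(incomes)
    return total


# Hypothetical street: each inner list holds the incomes of the adults in one household.
households = [[30_000], [40_000, 20_000], [50_000, 10_000, 0]]
true_total = sum(sum(incomes) for incomes in households)

rng = random.Random(1)
estimates = [estimate_street_income(households, rng) for _ in range(20_000)]
average_estimate = sum(estimates) / len(estimates)
print(f"true total={true_total}, average estimate={average_estimate:.0f}")
```

Any single estimate can be well off, but averaged over many repetitions the weighted estimate centres on the true total, which is what makes it unbiased.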

When every element in the population does have the same probability of selection, this is known as an 'equal probability of selection' (EPS) design. Such designs are also referred to as 'self-weighting' because all sampled units are given the same weight.

Probability sampling includes: Simple Random Sampling, Systematic Sampling, Stratified Sampling, Probability Proportional to Size Sampling, and Cluster or Multistage Sampling. These various ways of probability sampling have two things in common: every element has a known nonzero probability of being sampled, and the design involves random selection at some point. Nonprobability sampling, by contrast, involves the selection of elements based on assumptions regarding the population of interest, which forms the criteria for selection.

Hence, because the selection of elements is nonrandom, nonprobability sampling does not allow the estimation of sampling errors. These conditions give rise to exclusion bias, placing limits on how much information a sample can provide about the population. Information about the relationship between sample and population is limited, making it difficult to extrapolate from the sample to the population.

Example: We visit every household in a given street, and interview the first person to answer the door. In any household with more than one occupant, this is a nonprobability sample, because some people are more likely to answer the door (e.g. an unemployed person who spends most of their time at home is more likely to answer than an employed housemate who might be at work when the interviewer calls) and it's not practical to calculate these probabilities. Nonprobability sampling methods include convenience sampling, quota sampling, and purposive sampling.

In addition, nonresponse effects may turn any probability design into a nonprobability design if the characteristics of nonresponse are not well understood, since nonresponse effectively modifies each element's probability of being sampled. Within any of the types of frames identified above, a variety of sampling methods can be employed individually or in combination.

Several factors commonly influence the choice between these designs. In a simple random sample (SRS) of a given size, all subsets of a sampling frame have an equal probability of being selected. Each element of the frame thus has an equal probability of selection: the frame is not subdivided or partitioned.

Furthermore, any given pair of elements has the same chance of selection as any other such pair (and similarly for triples, and so on). This minimizes bias and simplifies analysis of results.

In particular, the variance between individual results within the sample is a good indicator of variance in the overall population, which makes it relatively easy to estimate the accuracy of results.

Simple random sampling can be vulnerable to sampling error because the randomness of the selection may result in a sample that does not reflect the makeup of the population. For instance, a simple random sample of ten people from a given country will on average produce five men and five women, but any given trial is likely to overrepresent one sex and underrepresent the other.
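The ten-person example can be simulated with a short sketch. The population of 500 men and 500 women is hypothetical, and `simple_random_sample` is a thin illustrative wrapper around the standard library's `random.sample`.

```python
import random
from collections import Counter


def simple_random_sample(frame, size, rng):
    """Draw a simple random sample without replacement from the frame."""
    return rng.sample(frame, size)


# Hypothetical population with an even split between men and women.
population = ["M"] * 500 + ["F"] * 500
rng = random.Random(7)
trials = 10_000

men_counts = Counter(
    simple_random_sample(population, 10, rng).count("M") for _ in range(trials)
)
average_men = sum(men * n for men, n in men_counts.items()) / trials
share_exact_split = men_counts[5] / trials
print(f"average men per sample: {average_men:.2f}")
print(f"share of samples with exactly 5 men and 5 women: {share_exact_split:.0%}")
```

The average comes out close to five men per sample, yet only a minority of individual samples have an exact five and five split.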

Systematic and stratified techniques attempt to overcome this problem by "using information about the population" to choose a more "representative" sample.

Also, simple random sampling can be cumbersome and tedious when sampling from a large target population. In some cases, investigators are interested in research questions specific to subgroups of the population. For example, researchers might be interested in examining whether cognitive ability as a predictor of job performance is equally applicable across racial groups.

Simple random sampling cannot accommodate the needs of researchers in this situation, because it does not provide subsamples of the population, and other sampling strategies, such as stratified sampling, can be used instead.

Systematic sampling (also known as interval sampling) relies on arranging the study population according to some ordering scheme and then selecting elements at regular intervals through that ordered list.

Systematic sampling involves a random start and then proceeds with the selection of every kth element from then onwards. It is important that the starting point is not automatically the first in the list, but is instead randomly chosen from within the first to the kth element in the list.

A simple example would be to select every 10th name from the telephone directory (an 'every 10th' sample, also referred to as 'sampling with a skip of 10'). As long as the starting point is randomized, systematic sampling is a type of probability sampling. It is easy to implement and the stratification induced can make it efficient, if the variable by which the list is ordered is correlated with the variable of interest.
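Systematic sampling with a random start can be sketched in a few lines. The list of 1,000 entries is hypothetical, and `systematic_sample` is an illustrative helper.

```python
import random


def systematic_sample(frame, k, rng):
    """Select every k-th element after a random start within the first k elements."""
    if k < 1:
        raise ValueError("k must be at least 1")
    start = rng.randrange(k)  # index 0..k-1, i.e. the 1st to k-th element
    return frame[start::k]


# Hypothetical ordered list of 1,000 directory entries, numbered from 1.
entries = list(range(1, 1001))
sample = systematic_sample(entries, 10, random.Random(3))
print(len(sample), sample[:5])
```

Because the start is drawn at random from the first ten entries, each entry has a one in ten chance of ending up in the sample.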

For example, suppose we wish to sample people from a long street that starts in a poor area (house No. 1) and ends in an expensive district. A simple random selection of addresses from this street could easily end up with too many from the high end and too few from the low end (or vice versa), leading to an unrepresentative sample.

Selecting, for example, every 10th street number along the street ensures that the sample is spread evenly along the length of the street, representing all of these districts. If we always start at house 1, the sample is slightly biased towards the low end; by randomly selecting the start between 1 and 10, this bias is eliminated.

However, systematic sampling is especially vulnerable to periodicities in the list. If periodicity is present and the period is a multiple or factor of the interval used, the sample is especially likely to be unrepresentative of the overall population, making the scheme less accurate than simple random sampling.

For example, consider a street where the odd-numbered houses are all on the north (expensive) side of the road, and the even-numbered houses are all on the south (cheap) side. Under the sampling scheme given above, it is impossible to get a representative sample: either the houses sampled will all be from the odd-numbered, expensive side, or they will all be from the even-numbered, cheap side. The exception is when the researcher has previous knowledge of this bias and avoids it by using a skip which ensures jumping between the two sides (any odd-numbered skip).
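The odd/even street can be checked directly. The sketch below assumes a hypothetical street of 100 houses and reports which sides a systematic sample touches for a given start and skip.

```python
def sides_sampled(start, skip, n_houses=100):
    """Return the set of street sides hit by a systematic sample of house numbers.

    Assumption from the example: odd numbers are on the north side,
    even numbers on the south side. n_houses=100 is an arbitrary choice.
    """
    houses = range(start, n_houses + 1, skip)
    return {"north" if h % 2 == 1 else "south" for h in houses}

print(sides_sampled(start=3, skip=10))  # even skip, odd start: one side only
print(sides_sampled(start=4, skip=10))  # even skip, even start: the other side only
print(sides_sampled(start=3, skip=9))   # odd skip: alternates between both sides
```

An even skip preserves the parity of the starting house, so every selected house lies on the same side; an odd skip flips parity at each step.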

Another drawback of systematic sampling is that even in scenarios where it is more accurate than SRS, its theoretical properties make it difficult to quantify that accuracy. In the two examples of systematic sampling that are given above, much of the potential sampling error is due to variation between neighbouring houses — but because this method never selects two neighbouring houses, the sample will not give us any information on that variation.

As described above, systematic sampling is an EPS method, because all elements have the same probability of selection (in the example given, one in ten). It is not 'simple random sampling' because different subsets of the same size have different selection probabilities: for example, a set of the form {4, 14, 24, ...} has a one-in-ten probability of selection, whereas any set containing two neighbouring houses has zero probability of selection.

Systematic sampling can also be adapted to a non-EPS approach; for an example, see discussion of PPS samples below.

When the population embraces a number of distinct categories, the frame can be organized by these categories into separate "strata", each of which is then sampled as an independent sub-population. Stratified sampling has several potential benefits. First, dividing the population into distinct, independent strata can enable researchers to draw inferences about specific subgroups that may be lost in a more generalized random sample.

Second, utilizing a stratified sampling method can lead to more efficient statistical estimates provided that strata are selected based upon relevance to the criterion in question, instead of availability of the samples.

Even if a stratified sampling approach does not lead to increased statistical efficiency, such a tactic will not result in less efficiency than would simple random sampling, provided that each stratum is proportional to the group's size in the population.
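Proportional allocation, where each stratum contributes in proportion to its size in the population, might be sketched as follows. The population of 100 units in three groups and the helper name `stratified_sample` are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(units, stratum_of, fraction, rng):
    """Draw a simple random sample of the same fraction from every stratum."""
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for label in sorted(strata):
        members = strata[label]
        n = round(len(members) * fraction)  # proportional allocation
        sample.extend(rng.sample(members, n))
    return sample

# Hypothetical population: 60 units in group A, 30 in B, 10 in C.
population = (
    [(i, "A") for i in range(60)]
    + [(i, "B") for i in range(60, 90)]
    + [(i, "C") for i in range(90, 100)]
)
sample = stratified_sample(population, lambda u: u[1], 0.1, random.Random(0))
print(Counter(group for _, group in sample))
```

Unlike a simple random sample of ten, this design always returns the groups in their population proportions; only the choice of units within each group is left to chance.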

Third, it is sometimes the case that data are more readily available for individual, pre-existing strata within a population than for the overall population; in such cases, using a stratified sampling approach may be more convenient than aggregating data across groups (though this may potentially be at odds with the previously noted importance of utilizing criterion-relevant strata).

Finally, since each stratum is treated as an independent population, different sampling approaches can be applied to different strata, potentially enabling researchers to use the approach best suited (or most cost-effective) for each identified subgroup within the population.

There are, however, some potential drawbacks to using stratified sampling. First, identifying strata and implementing such an approach can increase the cost and complexity of sample selection, as well as leading to increased complexity of population estimates.

Second, when examining multiple criteria, stratifying variables may be related to some, but not to others, further complicating the design, and potentially reducing the utility of the strata. Finally, in some cases (such as designs with a large number of strata, or those with a specified minimum sample size per group), stratified sampling can potentially require a larger sample than would other methods (although in most cases, the required sample size would be no larger than would be required for simple random sampling).

Stratification is sometimes introduced after the sampling phase in a process called "poststratification". Although the method is susceptible to the pitfalls of post hoc approaches, it can provide several benefits in the right situation.

Implementation usually follows a simple random sample. In addition to allowing for stratification on an ancillary variable, poststratification can be used to implement weighting, which can improve the precision of a sample's estimates. Choice-based sampling is one of the stratified sampling strategies.
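As a rough illustration of poststratification weighting, the sketch below reweights stratum means by known population shares. The strata, values, and shares are invented for the example, and the function assumes every stratum with a non-zero population share appears at least once in the sample.

```python
def poststratified_mean(sample, population_share):
    """Weight each stratum's sample mean by its known population share.

    sample: list of (stratum, value) pairs from a simple random sample.
    population_share: dict mapping stratum -> share of the population (sums to 1).
    """
    totals, counts = {}, {}
    for stratum, value in sample:
        totals[stratum] = totals.get(stratum, 0.0) + value
        counts[stratum] = counts.get(stratum, 0) + 1
    return sum(population_share[s] * totals[s] / counts[s] for s in counts)

# Hypothetical sample that over-represents the "urban" stratum (8 of 10 units).
sample = [("urban", 10.0)] * 8 + [("rural", 20.0)] * 2
shares = {"urban": 0.5, "rural": 0.5}  # assumed known from an external source

print(sum(v for _, v in sample) / len(sample))  # unweighted mean: 12.0
print(poststratified_mean(sample, shares))      # poststratified mean: 15.0
```

The unweighted mean is pulled towards the over-represented stratum; reweighting restores each stratum to its population share.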

In choice-based sampling, [13] the data are stratified on the target and a sample is taken from each stratum so that the rare target class will be more represented in the sample.

The model is then built on this biased sample. The effects of the input variables on the target are often estimated with more precision with the choice-based sample even when a smaller overall sample size is taken, compared to a random sample.

The results usually must be adjusted to correct for the oversampling. In some cases the sample designer has access to an "auxiliary variable" or "size measure", believed to be correlated to the variable of interest, for each element in the population.

These data can be used to improve accuracy in sample design. One option is to use the auxiliary variable as a basis for stratification, as discussed above.

Another option is probability proportional to size ('PPS') sampling, in which the selection probability for each element is set to be proportional to its size measure, up to a maximum of 1.

In a simple PPS design, these selection probabilities can then be used as the basis for Poisson sampling. However, this has the drawback of variable sample size, and different portions of the population may still be over- or under-represented due to chance variation in selections.
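A minimal sketch of Poisson sampling with PPS probabilities follows. The size measures and the target of two selections are made-up values; each element is included independently, which is why the realised sample size varies from draw to draw.

```python
import random

def poisson_pps_sample(sizes, expected_n, rng):
    """Include each element independently with probability proportional to size.

    Probabilities are capped at 1, so very large elements are always selected.
    Returns the chosen indices and the inclusion probabilities used.
    """
    total = sum(sizes)
    probs = [min(1.0, expected_n * s / total) for s in sizes]
    chosen = [i for i, p in enumerate(probs) if rng.random() < p]
    return chosen, probs

sizes = [10, 20, 30, 40]  # hypothetical size measures
for seed in range(5):
    chosen, _ = poisson_pps_sample(sizes, expected_n=2, rng=random.Random(seed))
    print(f"seed {seed}: selected {chosen} (sample size {len(chosen)})")
```

The expected sample size is two here, but individual draws can return anywhere from zero to four elements.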

Systematic sampling theory can be used to create a probability proportionate to size sample. This is done by treating each count within the size variable as a single sampling unit.

Samples are then identified by selecting at even intervals among these counts within the size variable. This method is sometimes called PPS-sequential or monetary unit sampling in the case of audits or forensic sampling. Example: Suppose we have six schools with different student populations, and we want to use student population as the basis for a PPS sample of size three.

After a random start, selection proceeds at even intervals through the running total of students; in this example it picks the first, fourth, and sixth schools. The PPS approach can improve accuracy for a given sample size by concentrating sample on large elements that have the greatest impact on population estimates.
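The school example can be worked through in code. The six population figures and the random start below are illustrative assumptions, not figures taken from the text; they are chosen so that the selection lands on the first, fourth, and sixth schools.

```python
from bisect import bisect_left
from itertools import accumulate

def pps_systematic(sizes, n, start):
    """Select n units by stepping through cumulative sizes at a fixed interval.

    Each unit 'owns' a block of numbers equal to its size; a unit is selected
    when a selection point falls inside its block. Returns 1-based positions.
    """
    total = sum(sizes)
    interval = total / n
    if not 1 <= start <= interval:
        raise ValueError("start must lie within the first interval")
    cumulative = list(accumulate(sizes))
    points = [start + i * interval for i in range(n)]
    return [bisect_left(cumulative, p) + 1 for p in points]

# Assumed figures for illustration: six schools, 1500 students in total.
schools = [150, 180, 200, 220, 260, 490]
print(pps_systematic(schools, n=3, start=137))  # [1, 4, 6]
```

With an interval of 500, the selection points are 137, 637, and 1137, which fall in the number blocks of the first, fourth, and sixth schools. A unit larger than the interval could be hit more than once; this sketch does not handle that case specially.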

PPS sampling is commonly used for surveys of businesses, where element size varies greatly and auxiliary information is often available — for instance, a survey attempting to measure the number of guest-nights spent in hotels might use each hotel's number of rooms as an auxiliary variable.

In some cases, an older measurement of the variable of interest can be used as an auxiliary variable when attempting to produce more current estimates.

Sometimes it is more cost-effective to select respondents in groups ('clusters'). Sampling is often clustered by geography, or by time periods. (Nearly all samples are in some sense 'clustered' in time, although this is rarely taken into account in the analysis.)

For instance, if surveying households within a city, we might choose to select city blocks and then interview every household within the selected blocks. Clustering can reduce travel and administrative costs. In the example above, an interviewer can make a single trip to visit several households in one block, rather than having to drive to a different block for each household.

It also means that one does not need a sampling frame listing all elements in the target population. Instead, clusters can be chosen from a cluster-level frame, with an element-level frame created only for the selected clusters.

In the example above, the sample only requires a block-level city map for initial selections, and then a household-level map of the selected blocks, rather than a household-level map of the whole city.
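The city-block example might look like this in code. The city layout (20 blocks of 5 households each) and all identifiers are hypothetical; note that only the block list is needed to make the initial selection.

```python
import random

def cluster_sample(blocks, n_blocks, rng):
    """One-stage cluster sample: pick blocks at random, keep every household in them.

    blocks: dict mapping block id -> list of households in that block.
    """
    chosen = rng.sample(sorted(blocks), n_blocks)  # needs only the block-level frame
    return {block: list(blocks[block]) for block in chosen}

# Hypothetical city: 20 blocks with 5 households each.
city = {f"block_{b:02d}": [f"hh_{b:02d}_{h}" for h in range(5)] for b in range(20)}
selected = cluster_sample(city, n_blocks=4, rng=random.Random(1))
for block, households in selected.items():
    print(block, households)
```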

Cluster sampling (also known as clustered sampling) generally increases the variability of sample estimates above that of simple random sampling, depending on how the clusters differ between one another as compared to the within-cluster variation.

For this reason, cluster sampling requires a larger sample than SRS to achieve the same level of accuracy — but cost savings from clustering might still make this a cheaper option. Cluster sampling is commonly implemented as multistage sampling.

This is a complex form of cluster sampling in which two or more levels of units are embedded one in the other. The first stage consists of constructing the clusters that will be used to sample from. In the second stage, a sample of primary units is randomly selected from each cluster rather than using all units contained in all selected clusters.

In following stages, in each of those selected clusters, additional samples of units are selected, and so on. All ultimate units (individuals, for instance) selected at the last step of this procedure are then surveyed.

This technique, thus, is essentially the process of taking random subsamples of preceding random samples. Multistage sampling can substantially reduce sampling costs, where the complete population list would need to be constructed before other sampling methods could be applied.
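A two-stage version of this idea, taking a random subsample within each randomly selected cluster, can be sketched as below. The cluster layout and the function name `two_stage_sample` are assumptions made for the example.

```python
import random

def two_stage_sample(clusters, n_clusters, n_per_cluster, rng):
    """Stage 1: sample clusters. Stage 2: sample units within each chosen cluster."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {
        c: rng.sample(clusters[c], min(n_per_cluster, len(clusters[c])))
        for c in chosen
    }

# Hypothetical frame: 20 clusters of 5 units each.
frame = {f"cluster_{c:02d}": [f"unit_{c:02d}_{u}" for u in range(5)] for c in range(20)}
sample = two_stage_sample(frame, n_clusters=4, n_per_cluster=2, rng=random.Random(1))
for cluster, units in sample.items():
    print(cluster, units)
```

Only the four selected clusters ever need a unit-level list, which is where the cost saving over a full population list comes from.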

By eliminating the work involved in describing clusters that are not selected, multistage sampling can reduce the large costs associated with traditional cluster sampling.

In quota sampling, the population is first segmented into mutually exclusive sub-groups, just as in stratified sampling. Then judgement is used to select the subjects or units from each segment based on a specified proportion. For example, an interviewer may be told to sample a set number of females and a set number of males within a specified age band (from 45 up to some upper limit). It is this second step which makes the technique one of non-probability sampling.

In quota sampling the selection of the sample is non-random. For example, interviewers might be tempted to interview those who look most helpful. The problem is that these samples may be biased because not everyone gets a chance of selection.

This non-random element is its greatest weakness, and quota versus probability sampling has been a matter of controversy for several years.

In imbalanced datasets, where the sampling ratio does not follow the population statistics, one can resample the dataset in a conservative manner called minimax sampling.

Minimax sampling has its origin in the Anderson minimax ratio, whose value is proved to be 0.5. This ratio can be proved to be the minimax ratio only under the assumption of an LDA classifier with Gaussian distributions.

The notion of minimax sampling has recently been developed for a general class of classification rules, called class-wise smart classifiers. In this case, the sampling ratio of classes is selected so that the worst-case classifier error, over all the possible population statistics for class prior probabilities, is as small as possible.

Accidental sampling (sometimes known as grab, convenience or opportunity sampling) is a type of nonprobability sampling which involves the sample being drawn from that part of the population which is close to hand. That is, a population is selected because it is readily available and convenient.

Sample members may be included simply because the researcher happens to meet them, or because they can be reached through technological means such as the internet or the phone.

The researcher using such a sample cannot scientifically make generalizations about the total population from this sample because it would not be representative enough. For example, if the interviewer were to conduct such a survey at a shopping center early in the morning on a given day, the people that they could interview would be limited to those present there at that time. Their views would not represent those of other members of society in the area, as they might if the survey were conducted at different times of day and several times per week.

This type of sampling is most useful for pilot testing.

In social science research, snowball sampling is a similar technique, where existing study subjects are used to recruit more subjects into the sample.

Some variants of snowball sampling, such as respondent driven sampling, allow calculation of selection probabilities and are probability sampling methods under certain conditions.

The voluntary sampling method is a type of non-probability sampling. Volunteers choose to complete a survey.

One of the biggest hurdles in data analytics is dealing with massive amounts of data. Whenever you conduct research on a particular topic, it can be impractical and even impossible to study the whole population. So how do we overcome this problem? Is there a way that you can pick a subset of the data that represents the entire dataset? As it turns out, there is. There are several different types of sampling techniques in data analytics that you can use for research without having to investigate the entire dataset. Before we start with types of sampling techniques in data analytics, we need to know what exactly sampling is and how it works.

To sample or not to sample, that is the question. Data sampling has always been a hot topic in data analytics. Is it good or bad? Does it help or does it cause problems? Or maybe both? Data sampling is the practice of selecting a subset or sample of data from a larger dataset to represent the entire population.
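As a small illustration of letting a subset stand in for the whole dataset, the sketch below estimates a total from a 10% simple random sample by scaling the sample sum up. The session data are randomly generated placeholders and `estimate_total` is a hypothetical helper, not any analytics product's actual method.

```python
import random

def estimate_total(values, fraction, rng):
    """Estimate the sum of `values` from a simple random sample of the given fraction."""
    n = max(1, round(len(values) * fraction))
    sample = rng.sample(values, n)
    # Scale the sample sum by the inverse of the sampling rate actually achieved.
    return sum(sample) * len(values) / n

rng = random.Random(7)
# Hypothetical data: conversions per session for 10,000 sessions.
sessions = [rng.randint(0, 5) for _ in range(10_000)]

print("true total:     ", sum(sessions))
print("estimated total:", round(estimate_total(sessions, 0.1, rng)))
```

The estimate is computed from a tenth of the data, so it is cheaper to produce but will generally differ somewhat from the true total.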
